TL;DR

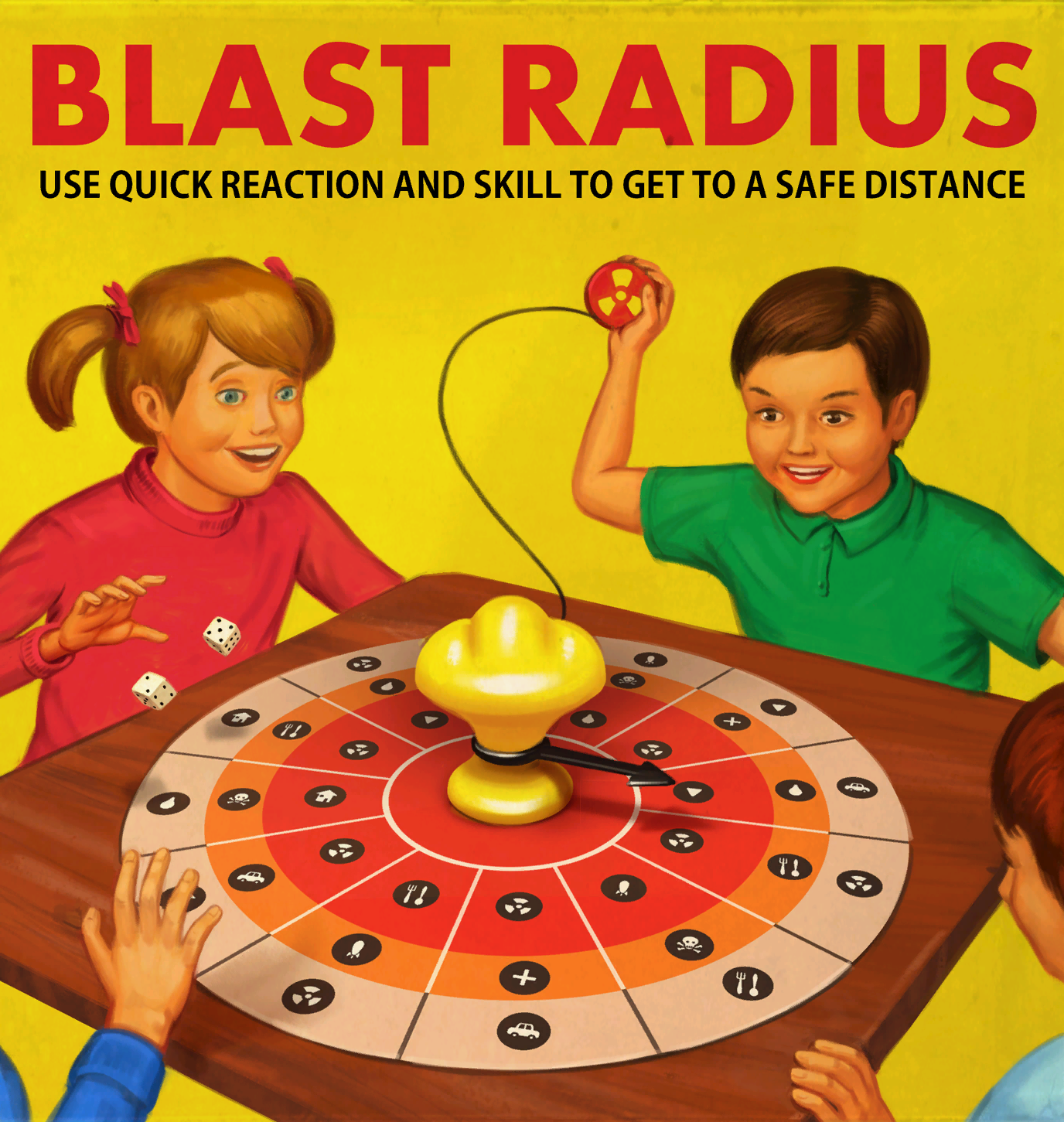

- When you organize your CloudFormation stacks you should try to separate your static/persistent resources from your dynamic/ephemeral resources.

- Chop up your static stacks into multiple smaller ones to further limit the blast radius. Approach this with common sense: the goal is not to end up with a hundred CloudFormation stacks for a small project.

- Try to couple your stacks as loosely as possible. Personally, I prefer JSON parameter files stored in Git to achieve this.

Infrastructure as Code (IaC)

This article is not about convincing you to adopt Infrastructure as Code. I assume you’re already at the level where IaC is non-negotiable. If not, I strongly advise you to read about The Twelve-Factor App (especially factor one: Codebase) and to familiarize yourself with GitOps.

How to organize CloudFormation stacks

Since you arrived at this blog post, I assume you’re already using AWS CloudFormation, or at least intend to. Being quite experienced in writing CloudFormation, I want to share some of the lessons I learned about organizing CloudFormation stacks.

To state the obvious: this article is not about syntax. I consider syntax the easy part. The subject I want to focus on is how to organize your CloudFormation stacks. Actually, AWS has already done quite a good job with its own write-up: AWS CloudFormation Best Practices. It’s a nice article, but IMHO it lacks emphasis and depth on its single most important paragraph: Organize Your Stacks By Lifecycle and Ownership.

From the paragraph Organize Your Stacks By Lifecycle and Ownership I would like to highlight the following lines:

For additional guidance about organizing your stacks, you can use two common frameworks: a multi-layered architecture and service-oriented architecture (SOA).

A layered architecture organizes stacks into multiple horizontal layers that build on top of one another, where each layer has a dependency on the layer directly below it. You can have one or more stacks in each layer, but within each layer, your stacks should have AWS resources with similar lifecycles and ownership.

With a service-oriented architecture, you can organize big business problems into manageable parts. Each of these parts is a service that has a clearly defined purpose and represents a self-contained unit of functionality. You can map these services to a stack, where each stack has its own lifecycle and owners. All of these services (stacks) can be wired together so that they can interact with one another.

Let’s take a closer look, as this description is quite abstract. In the article, AWS mentions two common patterns you could apply: a layered architecture and a service-oriented architecture. I would like to add explicitly that you can apply both at the same time.

A layered architecture and volatility

In addition to the article, I would like to state that your layered architecture should reflect the volatility of your resources. Consider this the main takeaway of this article. Under all circumstances you should try to separate your static resources from your dynamic resources. The reasons to do so are to limit the blast radius and to honor your commitment to stay within the boundaries of your Recovery Time Objective (RTO).

What are static (persistent) resources?

Most setups have a static part. Most often this part consists of your persistence layer and its surroundings (RDS, S3 Buckets, Load Balancers, SNS, SQS, …). Roughly speaking, these are all the resources that barely change. By nature these static resources are also a lot harder to change, mainly because they hold persistent data or because they are tied to other resources, which are sometimes even managed outside your context.

What are dynamic (ephemeral) resources?

On the other end of the spectrum you find the dynamic part of your application. I even tend to call it the ephemeral part of the setup. Ephemeral resources are easy to throw away and to replace. This part of your setup typically also changes with every trigger of your CI/CD pipeline. Immutable EC2 instances, containers, Lambda functions and other resources which are easy to throw away and to restore fall under this umbrella.
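The split between static and dynamic resources can be made explicit with a small lookup. What follows is only a minimal sketch: the two sets are my own assumption of how the resource types mentioned above might be classified, and the function names are hypothetical. Your own project may well draw the line differently.

```python
from typing import Dict, List

# Assumed classification, based on the examples given in the text.
PERSISTENT_TYPES = {
    "AWS::RDS::DBInstance",
    "AWS::S3::Bucket",
    "AWS::ElasticLoadBalancingV2::LoadBalancer",
    "AWS::SNS::Topic",
    "AWS::SQS::Queue",
}
EPHEMERAL_TYPES = {
    "AWS::EC2::Instance",
    "AWS::ECS::Service",
    "AWS::Lambda::Function",
}


def layer_for(resource_type: str) -> str:
    """Return the layer a CloudFormation resource type belongs to."""
    if resource_type in PERSISTENT_TYPES:
        return "persistent"
    if resource_type in EPHEMERAL_TYPES:
        return "ephemeral"
    # Anything unknown needs a human decision, not a silent default.
    return "undecided"


def split_by_layer(resources: Dict[str, str]) -> Dict[str, List[str]]:
    """Group logical resource IDs (mapped to their types) by layer."""
    layers: Dict[str, List[str]] = {
        "persistent": [],
        "ephemeral": [],
        "undecided": [],
    }
    for logical_id, resource_type in sorted(resources.items()):
        layers[layer_for(resource_type)].append(logical_id)
    return layers


if __name__ == "__main__":
    # Hypothetical logical IDs, for illustration only.
    print(split_by_layer({
        "Database": "AWS::RDS::DBInstance",
        "ApiHandler": "AWS::Lambda::Function",
        "DeployRole": "AWS::IAM::Role",
    }))
```

The "undecided" bucket is deliberate: a resource that fits neither list is exactly the kind you want to think about before it lands in a stack.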

Setting up a layered architecture in practice

When designing your CloudFormation stacks, you should continuously challenge yourself with the following questions: “If things go really wrong, how do I recover? What’s the impact? What is the time needed to fix everything?”

Basically, you have to envision a stack that fails during an update and leaves you in an unrecoverable state. Read: your only way out of the failed state is to throw away your CloudFormation stack and re-create it from scratch. Yes, this happens from time to time, so learn to deal with it :-)

Blast radius

Limiting blast radius in this context is highly related to probability. Allow me to explain: having your static resources in a separate stack has the huge advantage of lowering the probability of high-impact issues. Since all the static resources live in a separate stack, the pipeline that maintains that stack doesn’t need to be triggered very often. On top of that, separating resources in itself already limits the blast radius. For those reasons I split my static resources over multiple smaller stacks, purely to limit the blast radius as much as possible.

In contrast to your static resources, your dynamic resources are very volatile and live in a separate stack which updates on every commit. Because this pipeline triggers updates at a much faster pace, the chance of errors is much higher. This is why a stack like this should be treated as ephemeral. You should be able to throw away an ephemeral stack with almost no impact, and a simple pipeline re-trigger should be able to re-create it from scratch.
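To put a rough number on the probability argument, here is a back-of-the-envelope sketch. It assumes every stack update fails independently with the same small probability, which is a simplification, and the failure rate and update counts are invented for illustration rather than measured.

```python
def chance_of_failed_update(failure_rate_per_update: float, updates: int) -> float:
    """Probability that at least one of `updates` independent updates fails."""
    return 1.0 - (1.0 - failure_rate_per_update) ** updates


# Assumption: 1 in 100 stack updates goes wrong, for both kinds of stack.
ASSUMED_FAILURE_RATE = 0.01

# Assumption: the persistent pipeline runs a handful of times per year,
# while the ephemeral pipeline runs on every commit.
persistent_risk = chance_of_failed_update(ASSUMED_FAILURE_RATE, updates=4)
ephemeral_risk = chance_of_failed_update(ASSUMED_FAILURE_RATE, updates=200)

print(f"persistent stack: {persistent_risk:.1%} chance of at least one failed update")
print(f"ephemeral stack:  {ephemeral_risk:.1%} chance of at least one failed update")
```

With the same per-update failure rate, the stack that is touched most often is by far the most likely to break at some point. That is fine as long as the stack that breaks is the one you can throw away.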

Layered architecture and coupling

By dividing your CloudFormation stacks you will create stack dependencies. My personal approach to linking these stacks together stands very much in contrast with the recommendation from AWS to “Use Cross-Stack References to Export Shared Resources”. For me that method of coupling is just too tight: CloudFormation refuses to change or delete an exported output as long as another stack imports it.

There’s no way around coupling your CloudFormation layers together, but as a rule of thumb you should couple them as loosely as possible. My preferred way to couple the layers is by using ordinary stack parameters in conjunction with JSON parameter files. The advantage is twofold: the coupling is less tight, and, more importantly, the parameter files live under source control. Having the parameter files in Git gives you a nice audit trail of changes and updates.
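As an illustration of the parameter-file approach, the sketch below turns a flat set of values from a persistent stack into the JSON list format that the AWS CLI accepts for stack parameters. The parameter names, endpoint and ARNs are hypothetical placeholders, and the helper function is mine, not part of any AWS tooling.

```python
import json
from typing import Dict, List


def to_cfn_parameters(values: Dict[str, str]) -> List[Dict[str, str]]:
    """Convert a flat mapping into CloudFormation's parameter list format."""
    return [
        {"ParameterKey": key, "ParameterValue": value}
        for key, value in sorted(values.items())
    ]


# Hypothetical identifiers of resources owned by the persistent stacks.
# The ephemeral templates would declare parameters with the same names.
acc_values = {
    "DatabaseEndpoint": "acc-db.example.internal",
    "LoadBalancerName": "acc-alb",
    "AlertTopicArn": "arn:aws:sns:eu-west-1:123456789012:acc-alerts",
}

# This string is what you would commit to Git as the parameter file.
parameters_json = json.dumps(to_cfn_parameters(acc_values), indent=2)
print(parameters_json)
```

A file in this format can be handed to the CLI with `aws cloudformation create-stack --parameters file://<your-file>.json` (or the `update-stack` equivalent). The ephemeral stack then only knows the values it was given, not which stack produced them, and every change to the link shows up as a diff in Git.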

An example to make it less abstract

To wrap up, here’s an example of the discussed pattern applied to a common application.

Imagine an application consisting of a webserver running on EC2, a REST API Gateway based on Lambda, an RDS database, an Elastic Load Balancer and some SNS topics. For that setup your CloudFormation stacks could look like this:

Persistent stacks:

- cfn-persistent-pipeline.yaml (runs only once in a while)

- cfn-persistent-rds.yaml

- cfn-persistent-alb.yaml

- cfn-persistent-sns.yaml

Ephemeral stacks:

- cfn-ephemeral-pipeline.yaml (runs on every application commit):

  - acc-config.json (link to the persistent stack)

  - prod.config.json (link to the persistent stack)

- cfn-ephemeral-serverless.yaml

- cfn-ephemeral-webserver.yaml

A word on CI/CD

While the mono vs. multi repository discussion deserves an article of its own, I have to briefly touch on the subject in relation to the discussed pattern.

The pattern of persistent and ephemeral stacks requires each to have its own pipeline, running at a different interval. The persistent pipeline is barely triggered, while the ephemeral pipeline is triggered for every single commit. You could easily achieve a two-pipeline setup running at a different pace with a multi repo setup: one repo holding your persistent resources and one repo holding your ephemeral resources, each containing its own pipeline.

On the other hand, the features of CodePipeline are (or were) somewhat limited, which makes a mono repo setup a tad harder (even impossible until recently, I think). I guess it’s now possible thanks to the recently announced Step Functions action type for CodePipeline, although I still need to try that setup out myself…
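Whatever tool ends up doing the work, the decision a mono repo setup has to make on every commit is small. The sketch below is tool-agnostic and not a CodePipeline feature: it assumes the `cfn-persistent-` file name prefix from the example above, and the function name and paths are hypothetical.

```python
from typing import Iterable, Set

PERSISTENT_PREFIX = "cfn-persistent-"


def pipelines_to_trigger(changed_files: Iterable[str]) -> Set[str]:
    """Decide which pipelines a commit should trigger in a mono repo."""
    # The ephemeral pipeline runs on every single commit.
    triggered = {"ephemeral"}
    for path in changed_files:
        filename = path.rsplit("/", 1)[-1]
        # The persistent pipeline only runs when one of its templates changed.
        if filename.startswith(PERSISTENT_PREFIX):
            triggered.add("persistent")
    return triggered


if __name__ == "__main__":
    print(sorted(pipelines_to_trigger(["src/handler.py"])))
    print(sorted(pipelines_to_trigger(["infra/cfn-persistent-rds.yaml"])))
```

In a multi repo setup this routing comes for free, because each repository only ever triggers its own pipeline.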

Enjoy and until next time!